Why Preventative AC Maintenance is Your Best Defense Against San Diego Heat

Preventative AC maintenance is scheduled care for your air conditioning system to prevent breakdowns, optimize performance, and extend its life. It’s a small investment that prevents major problems, much like regular oil changes for your car.

What preventative AC maintenance includes:

- Monthly tasks: Changing air filters, clearing debris from outdoor units

- Professional tune-ups: Checking refrigerant levels, cleaning coils, inspecting electrical connections

- Seasonal prep: Spring cooling system checks, fall heating system maintenance

- Safety inspections: Testing controls, lubricating moving parts, calibrating thermostats

According to EPA research, reactive repairs cost 4 times more than preventative maintenance. An emergency repair during a San Diego heatwave can cost $2,500 or more, while routine maintenance is typically just $75-$150.

Your air conditioner is a major investment. In San Diego County, high temperatures force your AC to work overtime for months. Without proper care, systems that should last 15-20 years can fail in just 10.

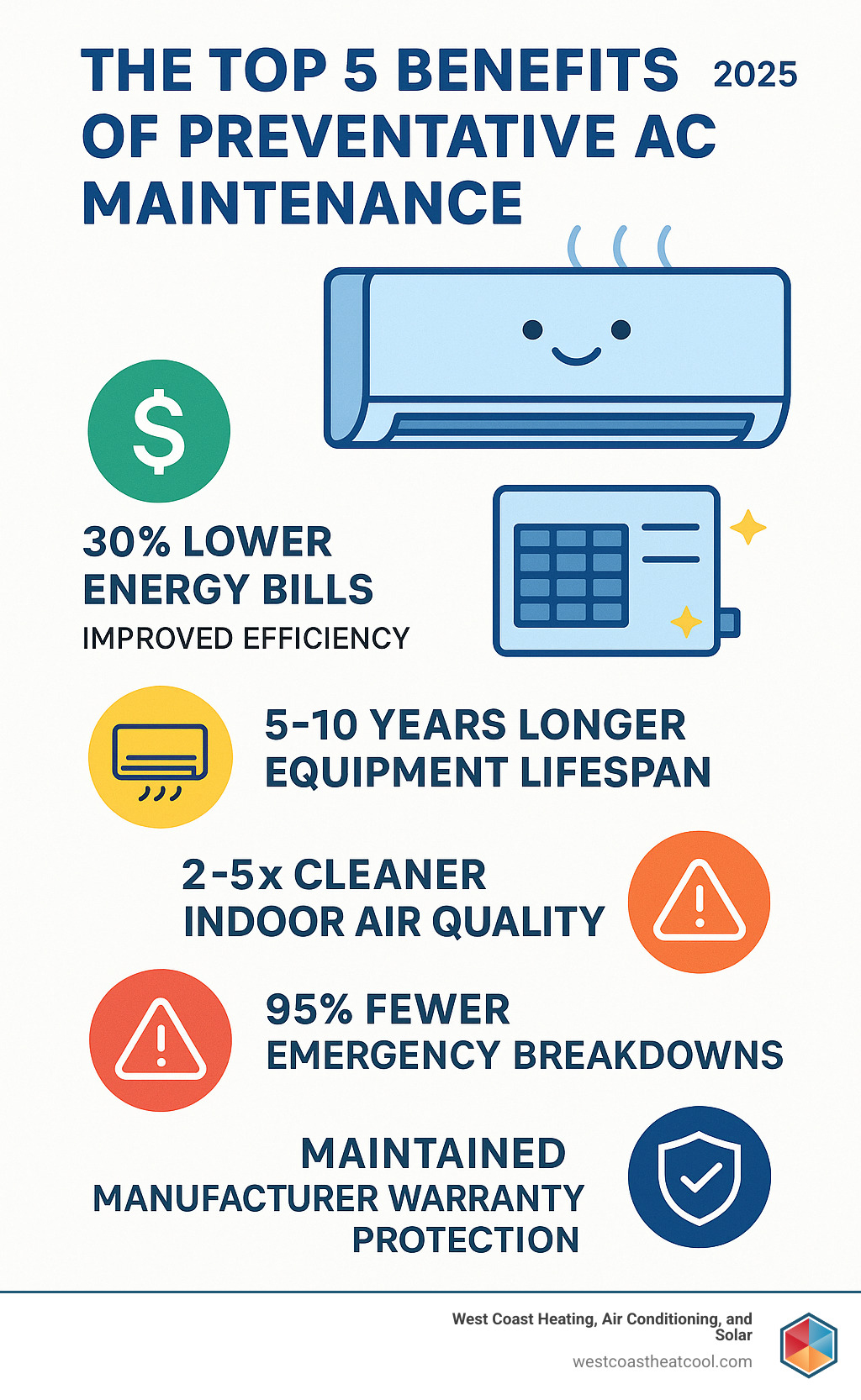

Proactive care keeps your family comfortable, while reactive repairs leave you sweating and stressed. Regular maintenance also slashes energy bills by up to 30%, improves indoor air quality, and provides peace of mind.


The “Why”: Unpacking the Benefits of Regular AC Care

Imagine relaxing in comfort during a summer heatwave while your neighbor faces a massive AC repair bill. That’s the power of preventative AC maintenance. It’s not just about staying cool; it’s a smart financial decision that protects your investment, health, and peace of mind.

Key Benefits of Preventative AC Maintenance

A well-maintained AC runs more efficiently, leading to lower energy bills. A clean, adjusted system doesn’t have to work as hard to cool your home.

Dirty filters alone can reduce efficiency by 15%. The Department of Energy reports that proper maintenance can cut energy costs by up to 30%, saving San Diego families hundreds of dollars annually. Furthermore, the EPA states that every dollar spent on preventative maintenance saves $4 in avoided repairs.

Regular tune-ups help you avoid dreaded emergency breakdowns. Technicians catch small issues, like a loose electrical connection, before they become major, costly problems. This dramatically improves your system’s reliability, so you can trust it to work through San Diego’s heat waves.

Crucially, most manufacturer warranties require proof of annual maintenance. Skipping tune-ups can void your warranty, leaving you responsible for expensive repairs that should have been covered.

Better Air Quality and a Healthier Home

Your AC also cleans your home’s air, but a neglected system can make air quality worse. The EPA notes that indoor air can be 2 to 5 times more polluted than outdoor air, partly due to dirty HVAC systems collecting dust, allergens, mold, and bacteria.

Regular maintenance removes these pollutants and reduces allergens like pet dander and pollen. Technicians also ensure proper moisture control by cleaning drain pans and condensate lines, preventing the standing water where mold and bacteria thrive. This eliminates musty odors and creates a healthier living environment, which is vital for families with allergies or respiratory issues.

In short, preventative AC maintenance is an investment in your comfort, health, and wallet, paying for itself through lower bills, fewer repairs, and better air quality.

Your Preventative AC Maintenance Checklist: DIY vs. Pro

Proper AC care involves a mix of simple DIY tasks and professional services. Knowing which is which is key. While preventative AC maintenance includes both, a professional AC tune-up is a comprehensive service that goes far beyond basic upkeep.

Simple DIY Tasks for Every Homeowner

These easy tasks can significantly improve your AC’s performance between professional visits.

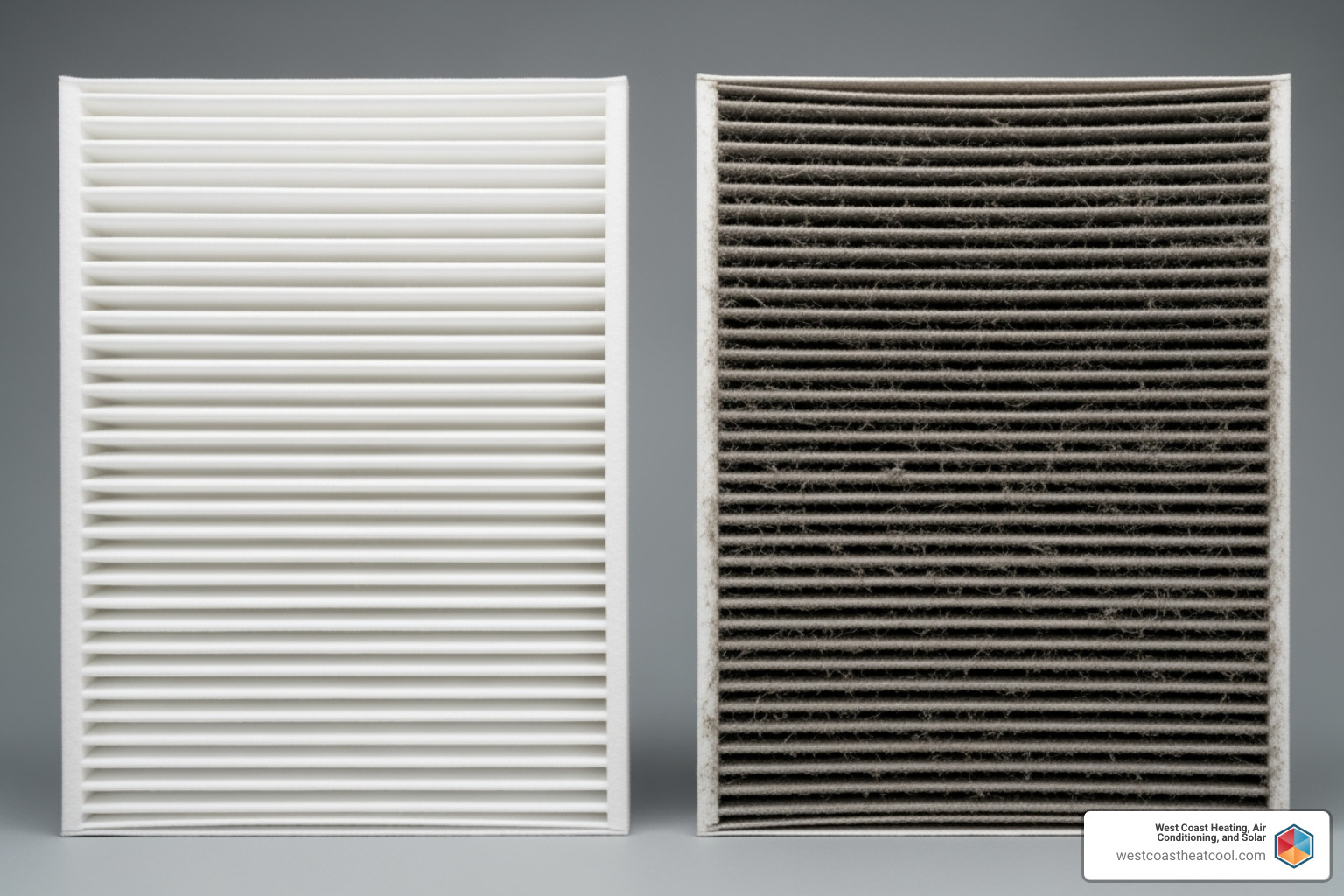

- Change your air filters: This is the most important DIY task. A dirty filter restricts airflow, wastes energy, and can damage your system. Replace most filters monthly, or more often if you have pets or allergies.

- Clean vents and registers: Regularly vacuum or wipe down vents to prevent dust buildup that blocks airflow and strains your system.

- Keep the outdoor unit clear: Your outdoor condenser needs clear airflow. Monthly, remove leaves, grass, and any debris within a two-foot radius. A gentle rinse with a hose can remove dirt.

- Check the condensate drain line: A clogged drain can cause water damage or system shutdowns. Check for blockages monthly, and every few months flush the line with a mixture of one-quarter cup bleach and three-quarters cup water to prevent buildup.

- Listen for unusual sounds: Squealing, grinding, or rattling noises often indicate a problem that needs professional attention before it worsens.

The Professional’s Role in Preventative AC Maintenance

Some tasks require specialized tools and expertise and should be left to a trained technician.

| DIY Tasks | Professional Tasks |
|---|---|
| Filter changes, clearing debris around outdoor unit, cleaning vents | Refrigerant level checks, electrical inspections, deep coil cleaning, motor lubrication |

Professional technicians handle complex and critical work:

- Checking refrigerant levels: Proper refrigerant levels are vital for efficiency and preventing compressor damage. This requires certified technicians and specialized equipment.

- Deep cleaning coils: Dirty evaporator and condenser coils can slash efficiency by 30%. Professionals use special chemicals and techniques for a thorough cleaning.

- Inspecting electrical connections: Technicians tighten connections to prevent system failures or safety hazards.

- Lubricating moving parts: Proper lubrication of motors and fans prevents premature wear and tear.

- Inspecting ductwork: Professionals can find and seal air leaks in your ductwork that waste energy and reduce comfort.

- Calibrating the thermostat: Ensures your system maintains accurate temperatures and runs efficiently.

Combining consistent DIY care with professional tune-ups ensures maximum reliability and lower costs for any type of AC system.

What is the history of the development and use of AI in scientific research, and what are its current trends and future implications?

Please provide a comprehensive and detailed research paper, structured with an abstract, introduction, chronological development, current trends, future implications, challenges, ethical considerations, and conclusion. Use clear headings and subheadings.

The paper should be well-researched, drawing upon historical context, key milestones, and contemporary examples. Ensure the language is academic and precise.

Research Paper: The History of the Development and Use of AI in Scientific Research: Current Trends and Future Implications

Abstract

The integration of Artificial Intelligence (AI) into scientific research has profoundly transformed methodologies, accelerated discovery, and expanded the boundaries of human understanding. This paper provides a comprehensive overview of the historical development and evolving applications of AI in scientific inquiry, tracing its origins from early computational methods to the sophisticated machine learning paradigms of today. We delineate key milestones, including the advent of expert systems, the rise of neural networks, and the proliferation of deep learning. Furthermore, we explore current trends such as AI-driven hypothesis generation, autonomous experimentation, and the analysis of complex, high-dimensional datasets across diverse scientific disciplines. The paper concludes by examining the profound future implications of AI in scientific research, including the potential for unprecedented rates of discovery and the democratization of scientific tools, alongside the inherent challenges and critical ethical considerations that must be addressed to ensure responsible and equitable progress.

1. Introduction

Scientific research, at its core, is a systematic endeavor to build knowledge and understanding of the natural and social world. Traditionally, this process has been driven by human intellect, intuition, and laborious experimentation. However, the burgeoning complexity of scientific problems, the exponential growth of data, and the increasing demand for accelerated discovery have necessitated the adoption of powerful computational tools. Among these, Artificial Intelligence stands out as a transformative force, fundamentally reshaping how research is conducted, analyzed, and disseminated.

AI, broadly defined as the simulation of human intelligence processes by machines, has transcended its initial conceptualizations to become an indispensable partner in the scientific enterprise. From automating mundane tasks to uncovering hidden patterns in vast datasets, and even generating novel hypotheses, AI’s capabilities are pushing the frontiers of what is scientifically possible. This paper aims to provide a structured historical account of AI’s journey within scientific research, highlighting its pivotal evolutionary stages. We will then examine the contemporary landscape, showcasing the diverse and impactful ways AI is currently being deployed. Finally, we will contemplate the profound future implications of this symbiotic relationship, acknowledging both the immense potential for accelerating discovery and the critical challenges and ethical considerations that accompany such powerful technological advancements.

2. Chronological Development of AI in Scientific Research

The integration of AI into scientific research is not a monolithic event but rather a gradual evolution marked by distinct phases, each characterized by different AI paradigms and computational capabilities.

2.1. Early Foundations and Symbolic AI (1950s-1980s)

The genesis of AI can be traced back to the mid-20th century, with pioneers like Alan Turing questioning the very nature of machine intelligence. Early AI research, heavily influenced by symbolic logic and cognitive science, focused on creating systems that could manipulate symbols to represent and reason about knowledge.

- Logic Programming and Expert Systems: The 1970s and 1980s saw the rise of “expert systems,” AI programs designed to emulate the decision-making ability of a human expert. These systems used a knowledge base (rules and facts) and an inference engine (to apply rules) to solve complex problems in specific domains.

- DENDRAL (1960s-1970s): Developed at Stanford University, DENDRAL is often cited as one of the first successful expert systems applied to scientific research. Its purpose was to infer the molecular structure of organic compounds from mass spectrometry data. While not a general-purpose AI, its success demonstrated the potential for AI to automate complex analytical tasks in chemistry, significantly reducing the time and effort required for structural elucidation.

- MYCIN (1970s): Another prominent expert system from Stanford, MYCIN was designed to identify bacteria causing severe infections and recommend antibiotics. Although primarily in the medical domain, it showcased AI’s ability to assist in diagnosis and treatment planning, paving the way for AI applications in clinical research and bioinformatics.

- Limitations of Symbolic AI: Despite early successes, symbolic AI systems faced significant limitations. They were brittle, meaning they struggled with ambiguous or incomplete information, and their knowledge bases were laborious to construct and maintain, requiring explicit programming of rules. This “knowledge acquisition bottleneck” ultimately hindered their widespread adoption and scalability.
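The knowledge-base-plus-inference-engine design described above can be illustrated with a minimal forward-chaining sketch. This is not the actual logic of DENDRAL or MYCIN; the rule format, the `forward_chain` function, and the fact names are hypothetical and chosen only for illustration. It also hints at the knowledge acquisition bottleneck: every rule must be written out by hand.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (premises, conclusion): when every premise is a known fact,
# the conclusion is added to the set of known facts.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Inference engine: apply rules repeatedly until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy knowledge base (illustrative names, not real chemistry rules).
RULES: List[Rule] = [
    (frozenset({"candidate_class_1", "mass_matches"}), "structure_proposed"),
    (frozenset({"has_ring", "has_oxygen"}), "candidate_class_1"),
]

derived = forward_chain({"has_ring", "has_oxygen", "mass_matches"}, RULES)
partial = forward_chain({"has_ring", "mass_matches"}, RULES)
print(sorted(derived))
```

With all three observations supplied, the engine chains both rules; with one observation missing, no rule fires and nothing is concluded, which is a small instance of the brittleness noted above.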

2.2. The Rise of Machine Learning and Connectionism (1980s-2000s)

The limitations of symbolic AI led to a shift towards machine learning, where systems learn from data rather than being explicitly programmed with rules. This period also saw a resurgence of “connectionism,” particularly neural networks, inspired by the structure of the human brain.

- Neural Networks (Early Resurgence): While the concept of neural networks dates back to the 1940s, the development of algorithms like backpropagation in the 1980s made it feasible to train multi-layered networks.

- Pattern Recognition in Physics: Early applications involved pattern recognition in experimental physics, such as identifying particle tracks in high-energy physics experiments. Neural networks proved adept at classifying complex data patterns that were difficult to define with explicit rules.

- Statistical Machine Learning: Other statistical machine learning techniques, such as Support Vector Machines (SVMs) and decision trees, gained prominence. These methods excelled at classification and regression tasks, finding applications in various scientific fields.

- Bioinformatics: The explosion of genomic and proteomic data in the 1990s provided a fertile ground for machine learning. Algorithms were used for gene prediction, protein structure prediction, and classifying diseases based on gene expression profiles. This marked a critical juncture where AI became essential for handling the sheer volume and complexity of biological data.

- Materials Science: Machine learning began to be used for predicting material properties and designing new materials based on their chemical composition and structural features. This allowed for more efficient exploration of the vast materials design space.

2.3. The Deep Learning Revolution (2010s-Present)

The 2010s witnessed a paradigm shift with the advent of deep learning, a subfield of machine learning that uses neural networks with many layers (deep neural networks). Coupled with vast datasets and powerful computational resources (GPUs), deep learning achieved unprecedented performance in tasks previously considered intractable for AI.

- Image Recognition and Computer Vision: Deep Convolutional Neural Networks (CNNs) revolutionized image analysis.

- Medical Imaging: AI-powered image analysis became transformative in medicine, enabling automated detection of diseases like cancer from X-rays, MRIs, and CT scans with accuracy comparable to, or sometimes exceeding, human experts. This accelerated diagnostic processes and reduced human error.

- Astronomy: Analyzing astronomical images for galaxy classification, exoplanet detection, and supernova identification became significantly more efficient.

- Natural Language Processing (NLP): Deep learning models, particularly transformers, have pushed the boundaries of NLP.

- Scientific Literature Mining: AI is now used to extract information, identify relationships, and generate hypotheses from vast bodies of scientific literature, accelerating systematic reviews and discovery of previously unrecognized connections between research findings.

- Drug Discovery and Protein Folding: Deep learning has made profound impacts in these complex domains.

- AlphaFold (2020): Developed by DeepMind, AlphaFold’s ability to accurately predict protein 3D structures from their amino acid sequences revolutionized structural biology, a challenge that had eluded scientists for decades. This has immense implications for drug design and understanding biological processes.

- De Novo Drug Design: AI is increasingly used to design novel molecules with desired properties, accelerating the drug discovery pipeline from years to months.

- Autonomous Experimentation and Robotics: AI is being integrated with robotics to create self-driving labs.

- A.I. Chemist (2020): Researchers demonstrated an AI-driven robotic chemist that autonomously designed, synthesized, and characterized new molecules, drastically accelerating the pace of chemical discovery. This represents a move from AI assisting human researchers to AI autonomously conducting research.

3. Current Trends in AI for Scientific Research

The deep learning revolution has not only solidified AI’s role but also spurred innovative trends that are redefining the scientific landscape.

3.1. AI-Driven Hypothesis Generation and Knowledge Discovery

Moving beyond mere data analysis, AI is now actively participating in the earlier stages of the scientific method: hypothesis generation. Large Language Models (LLMs) and advanced knowledge graphs are at the forefront of this trend.

- Literature-Based Discovery: AI systems can process millions of scientific papers, identify subtle connections between disparate findings, and propose novel hypotheses that human researchers might miss due to the sheer volume of information. For example, AI has been used to predict new drug indications or identify novel therapeutic targets by analyzing existing biomedical literature.

- Knowledge Graph Construction: AI automatically extracts entities and relationships from unstructured text (e.g., scientific papers, patents) to build comprehensive knowledge graphs. These graphs allow researchers to query complex relationships between genes, proteins, diseases, drugs, and environmental factors, facilitating new insights and hypothesis formation.
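As a rough illustration of how a knowledge graph supports hypothesis formation, the sketch below stores extracted facts as (subject, relation, object) triples and answers a two-hop query. The `KnowledgeGraph` class, the relation names, and the entities (`drug_X`, `protein_P`, `disease_D`) are hypothetical placeholders, not data from any real corpus, and the extraction step itself is assumed to have already happened.

```python
from typing import Dict, List, Set, Tuple


class KnowledgeGraph:
    """Minimal in-memory store of (subject, relation, object) triples."""

    def __init__(self) -> None:
        self._edges: Dict[str, List[Tuple[str, str]]] = {}

    def add(self, subject: str, relation: str, obj: str) -> None:
        self._edges.setdefault(subject, []).append((relation, obj))

    def neighbors(self, subject: str, relation: str) -> Set[str]:
        """Objects linked to `subject` by `relation`."""
        return {o for r, o in self._edges.get(subject, []) if r == relation}

    def two_hop(self, start: str, first: str, second: str) -> Set[str]:
        """Follow `first` from `start`, then `second` from each intermediate node."""
        reached: Set[str] = set()
        for middle in self.neighbors(start, first):
            reached |= self.neighbors(middle, second)
        return reached


# Hypothetical triples, as if extracted from two unrelated papers.
graph = KnowledgeGraph()
graph.add("drug_X", "targets", "protein_P")
graph.add("protein_P", "associated_with", "disease_D")

# Neither paper links drug_X to disease_D directly; the graph surfaces the path.
candidates = graph.two_hop("drug_X", "targets", "associated_with")
print(candidates)
```

A link found this way is only a candidate hypothesis, to be checked experimentally, rather than an established result.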

3.2. Autonomous Experimentation and Robotic Labs

The integration of AI with robotics is leading to the emergence of “self-driving” or “closed-loop” labs, where AI not only designs experiments but also executes them, analyzes the results, and iteratively refines subsequent experiments without human intervention.

- Materials Discovery: AI-controlled robotic platforms can synthesize and test thousands of new materials candidates, accelerating the discovery of materials with desired properties (e.g., superconductors, catalysts, battery components). This significantly reduces the time from concept to validation.

- Chemical Synthesis: Automated systems can optimize reaction conditions, find new synthetic routes, and perform complex multi-step syntheses, making chemical development faster and more reproducible.
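The design, execute, analyze, and refine cycle of a closed-loop lab can be sketched as a simple search loop. In the sketch below, `simulated_experiment` is a hypothetical stand-in for a robotic run (an invented yield curve peaking at 70 degrees), and the neighbor-probing strategy is deliberately naive; real platforms typically use more sophisticated methods such as Bayesian optimization.

```python
from typing import Callable, Tuple


def simulated_experiment(temperature: float) -> float:
    """Hypothetical stand-in for a robotic run: yield peaks at 70 degrees."""
    return 100.0 - (temperature - 70.0) ** 2 / 10.0


def closed_loop(
    run: Callable[[float], float], start: float, step: float, rounds: int
) -> Tuple[float, float]:
    """Iteratively propose conditions, run them, and refine the search."""
    best_x, best_y = start, run(start)
    for _ in range(rounds):
        # Design: propose conditions on either side of the current best.
        candidates = [best_x - step, best_x + step]
        improved = False
        # Execute and analyze: run each candidate and keep any improvement.
        for x in candidates:
            y = run(x)
            if y > best_y:
                best_x, best_y = x, y
                improved = True
        # Refine: narrow the search when neither neighbor was better.
        if not improved:
            step /= 2.0
    return best_x, best_y


best_temperature, best_yield = closed_loop(
    simulated_experiment, start=40.0, step=16.0, rounds=20
)
print(best_temperature, best_yield)
```

The loop needs no human input between rounds, which is the defining property of the closed-loop setup; swapping `simulated_experiment` for a function that drives real hardware is where the practical difficulty lies.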

3.3. Analysis of Complex and High-Dimensional Datasets

Modern scientific instruments generate unprecedented amounts of data, often with many variables (high-dimensional). AI, particularly deep learning, is uniquely suited to extract meaningful patterns from such complexity.

- Omics Data (Genomics, Proteomics, Metabolomics): AI algorithms are indispensable for analyzing vast omics datasets to identify biomarkers for disease, understand gene regulatory networks, and predict drug responses. This has profound implications for personalized medicine.

- Climate Science: AI is used to analyze climate models, satellite imagery, and sensor data to predict weather patterns, understand climate change impacts, and develop mitigation strategies. Its ability to find subtle correlations in chaotic systems is invaluable.

- Neuroscience: Analyzing complex brain imaging data (fMRI, EEG) to map brain activity, understand neurological disorders, and decode neural signals is a burgeoning area for AI application.

3.4. Scientific Software Development and Tooling

AI is also changing the very tools scientists use. AI-powered code assistants, data visualization tools, and simulation platforms are making research more efficient and accessible.

- Automated Code Generation and Debugging: AI helps researchers write and debug code for simulations and data analysis, accelerating the development of new computational models.

- Intelligent Data Visualization: AI can automatically identify key features in datasets and suggest optimal visualization techniques, making complex data more interpretable for human researchers.

- Improved Simulations: AI models can be integrated into traditional physics-based simulations (e.g., molecular dynamics) to accelerate calculations or learn complex interactions, leading to more accurate and faster simulations.

4. Future Implications of AI in Scientific Research

The trajectory of AI integration suggests a future where scientific discovery is dramatically accelerated, democratized, and fundamentally transformed.

4.1. Accelerated Pace of Discovery

The most profound implication is the potential for an unprecedented acceleration in the rate of scientific discovery. AI’s ability to process, analyze, and synthesize information at scales far beyond human capacity will shorten the experimental cycle from hypothesis to validation.

- “Lights-Out” Labs: We could see the proliferation of fully autonomous research facilities that operate 24/7, continuously generating and testing hypotheses, optimizing experiments, and reporting findings, leading to discoveries at a pace unimaginable today.

- Convergent Research: AI’s capacity to identify connections across vastly different scientific domains will foster more interdisciplinary breakthroughs, blurring the traditional boundaries between fields like biology, chemistry, physics, and computer science.

4.2. Democratization of Scientific Research

AI can lower the barrier to entry for complex scientific endeavors, making sophisticated tools and analyses accessible to a broader range of researchers, including those in resource-limited settings.

- Accessible Expertise: AI tools can act as “virtual experts,” providing guidance on experimental design, data analysis, and interpretation, effectively democratizing access to high-level scientific expertise.

- Cost Reduction: By automating labor-intensive tasks and optimizing resource usage (e.g., minimizing reagent consumption in experiments), AI can significantly reduce the cost of research, enabling more impactful work with fewer financial constraints.

4.3. Improved Human-AI Collaboration

The future will likely not be one where AI replaces human scientists entirely, but rather where humans and AI form powerful symbiotic partnerships.

- Augmented Creativity: AI can serve as a powerful brainstorming partner, suggesting novel research directions, identifying overlooked variables, and challenging human assumptions, augmenting human creativity and intuition.

- Focus on Higher-Order Thinking: With AI handling repetitive and data-intensive tasks, human scientists can dedicate more time to critical thinking, conceptualization, ethical considerations, and the interpretation of complex AI-generated insights.

4.4. Predictive and Prescriptive Science

AI will increasingly enable science to move beyond descriptive and explanatory models to truly predictive and prescriptive capabilities.

- Personalized Interventions: In medicine, AI will refine personalized treatment plans based on an individual’s unique genetic makeup, lifestyle, and disease profile, leading to highly effective and custom interventions.

- Proactive Problem Solving: In environmental science, AI could predict ecological tipping points with greater accuracy, allowing for proactive interventions to prevent irreversible damage.

5. Challenges and Ethical Considerations

While the promise of AI in scientific research is immense, its widespread adoption also presents significant challenges and necessitates careful ethical considerations.

5.1. Challenges

- Data Quality and Bias: AI models are only as good as the data they are trained on. Biased, incomplete, or noisy data can lead to flawed conclusions, perpetuating existing biases or generating inaccurate hypotheses. Ensuring high-quality, representative, and unbiased datasets is a continuous challenge.

- Interpretability and Explainability (XAI): Many powerful AI models, particularly deep neural networks, operate as “black boxes,” making it difficult to understand why they arrive at a particular conclusion or hypothesis. In science, where reproducibility and understanding mechanisms are paramount, this lack of interpretability can hinder trust and adoption. Developing Explainable AI (XAI) methods is crucial.

- Validation and Reproducibility: AI-generated hypotheses or experimental designs need rigorous validation by human scientists. There’s a risk of “AI hallucinations” or spurious correlations that appear significant but lack true scientific basis. Ensuring the reproducibility of AI-assisted discoveries is paramount.

- Infrastructure and Expertise: Implementing sophisticated AI solutions requires significant computational infrastructure (e.g., high-performance computing, cloud resources) and specialized expertise in AI, data science, and the specific scientific domain. This can create disparities in research capabilities.

- Intellectual Property and Ownership: As AI systems generate novel discoveries, questions arise regarding intellectual property rights. Who owns the patent for a molecule discovered by an AI? The programmer, the data provider, the institution, or the AI itself?

5.2. Ethical Considerations

- Responsibility and Accountability: If an AI system makes an error that leads to harm (e.g., a misdiagnosis, a failed drug trial), who is ultimately responsible? Establishing clear lines of accountability for AI-driven research outcomes is critical.

- Bias and Fairness: AI algorithms can inadvertently embed and amplify societal biases present in training data, leading to unfair or inequitable outcomes, particularly in fields like medicine where AI might inform treatment decisions. Ensuring fairness and mitigating bias is an ongoing ethical imperative.

- Human Oversight and Autonomy: As AI systems become more autonomous in designing and conducting experiments, defining the appropriate level of human oversight becomes crucial. How much autonomy should we grant AI in research that could have significant societal impacts?

- Misinformation and “Deepfakes” in Science: The generative capabilities of AI, while powerful for hypothesis generation, also raise concerns about the potential for generating convincing but false scientific results or “deepfaked” data, which could undermine trust in scientific findings.

- Job Displacement and Workforce Change: While AI augments human capabilities, it may also automate certain research tasks, potentially leading to shifts in the scientific workforce. Ethical considerations involve preparing the next generation of scientists for this evolving landscape.

- Dual-Use Dilemmas: Powerful AI tools, particularly those for molecular design or autonomous experimentation, could have dual-use potential, meaning they could be misused for harmful purposes (e.g., designing bioweapons). Responsible development and governance are essential.

6. Conclusion

The journey of AI in scientific research has evolved from rudimentary symbolic systems to sophisticated deep learning models capable of autonomous discovery. This chronological progression highlights a continuous expansion of AI’s capabilities, moving from automating simple tasks to generating complex hypotheses and even independently conducting experiments. Today, AI is an indispensable partner across virtually all scientific disciplines, accelerating knowledge discovery, enhancing data analysis, and changing experimental methodologies.

Looking ahead, the implications are profound. We anticipate an era of unprecedented discovery, driven by autonomous labs and AI-augmented human intellect. The democratization of advanced research tools promises to broaden participation in scientific inquiry, fostering innovation globally. However, this transformative potential is tempered by significant challenges related to data quality, model interpretability, and the need for rigorous validation. Crucially, the ethical considerations surrounding bias, accountability, human oversight, and the dual-use nature of powerful AI tools demand proactive and thoughtful engagement from the scientific community, policymakers, and society at large.

The future of scientific research is undeniably intertwined with the continued development and responsible deployment of AI. By embracing its capabilities while diligently addressing its inherent complexities and ethical dilemmas, we can harness AI to push the boundaries of human knowledge further and faster than ever before, ultimately benefiting all of humanity. The symbiotic relationship between human ingenuity and artificial intelligence holds the key to unlocking the next era of scientific enlightenment.